Automating test runs on hardware with Pipeline as Code

In addition to Jenkins development, during last 8 years I’ve been involved into continuous integration for hardware and embedded projects. At JUC2015/London I have conducted a talk about common automation challenges in the area.

In this blog post I would like to concentrate on Pipeline (formerly known as Workflow), which is a new ecosystem in Jenkins that allows implementing jobs in a domain specific language. It is in the suggested plugins list in the upcoming Jenkins 2.0 release.

The first time I tried Pipeline two and half years ago, it unfortunately did not work for my use-cases at all. I was very disappointed but tried it again a year later. This time, the plugin had become much more stable and useful. It had also attracted more contributors and started evolving more rapidly with the development of plugins extending the Pipeline ecosystem.

Currently, Pipeline a powerful tool available for Jenkins users to implement a variety of software delivery pipelines in code. I would like to highlight several Pipeline features which may be interesting to Jenkins users working specifically with embedded and hardware projects.

Introduction

In Embedded projects it’s frequently required to run tests on specific hardware peripherals: development boards, prototypes, etc. It may be required for both software and hardware areas, and especially for products involving both worlds. CI and CD methodologies require continuous integration and system testing, and Jenkins comes to help here. Jenkins is an automation framework, which can be adjusted to reliably work with hardware attached to its nodes.

Area challenges

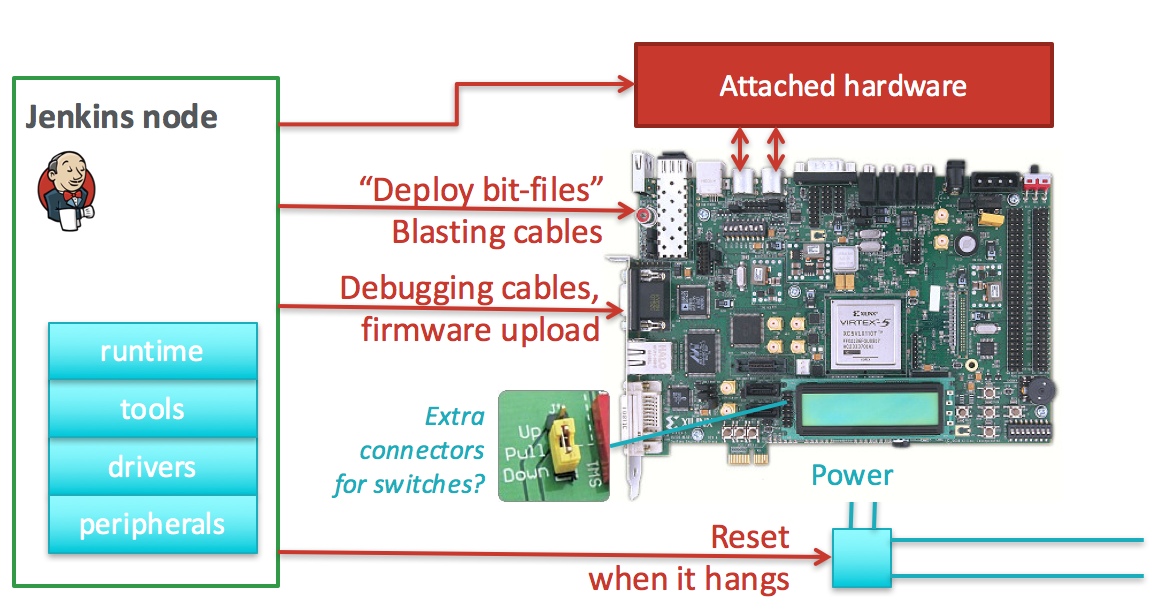

Generally, any peripheral hardware device can be attached to a Jenkins node. Since Jenkins nodes require Java only, almost every development machine can be attached. Below you can find a common connection scheme:

After the connection, Jenkins jobs could invoke common EDA tools via command-line interfaces. It can be easily done by a Execute shell build steps in free-style projects. Such testing scheme is commonly affected by the following issues:

-

Nodes with peripherals are being shared across several projects. Jenkins must ensure the correctness of access (e.g. by throttling the access).

-

In a single Freestyle project builds utilize the node for a long period. If you synthesize the item before the run, much of the peripheral utilization file may be wasted.

-

The issue can be solved by one of concurrency management plugins: Throttle Concurrent Builds, Lockable Resources or Exclusions.

-

-

Test parallelization on multiple nodes requires using of multiple projects or Matrix configurations, so it causes job chaining again.

-

These build chains can be created via Parameterized Trigger and Copy Artifacts, but it complicates job management and build history investigation.

-

-

Hardware infrastructure is usually flaky. If it fails during the build due to any reason, it’s hard to diagnose the issue and re-run the project if the issue comes from hardware.

-

Build Failure Analyzer allows to identify the root cause of a build failure (e.g. by build log parsing).

-

Conditional Build Step and Flexible Publish plugins allow altering the build flow according to the analysis results.

-

Combination of the plugins above is possible, but it makes job configurations extremely large.

-

-

Tests on hardware peripherals may take much time. If an infrastructure fails, we may have to restart the run from scratch. So the builds should be robust against infrastructure issues including network failures and Jenkins controller restarts.

-

Tests on hardware should be reproducible, so the environment and input parameters should be controlled well.

-

Jenkins supports cleaning workspaces, so it can get rid of temporary files generated by previous runs.

-

Jenkins provides support of agents connected via containers (e.g. Docker) or VMs, which allow creating clean environments for every new run. It’s important for 3rd-party tools, which may modify files outside the workspace: user home directory, temporary files, etc.

-

These environments still need to be connected to hardware peripherals, which may be a serious obstacle for Jenkins admins

-

The classic automation approaches in Jenkins are based on Free-style and Multi-configuration project types. Links to various articles on this topic are collected on the HW/Embedded Solution pageEmbedded on the Jenkins website. Tests automation on hardware peripherals has been covered in several publications by Robert Martin, Steve Harris, JL Gray, Gordon McGregor, Martin d’Anjou, and Sarah Woodall. There is also a top-level overview of classic approaches made by me at JUC2015/London (a bit outdated now).

On the other hand, there is no previous publications, which would address Pipeline usage for the Embedded area. In this post I want to address this use-case.

Pipeline as Code for test runs on hardware

Pipeline as Code is an approach for describing complex automation flows in software lifecycles: build, delivery, deployment, etc. It is being advertised in Continuous Delivery and DevOps methodologies.

In Jenkins there are two most popular plugins: Pipeline and Job DSL. JobDSL Plugin internally generates common freestyle jobs according to the script, so it’s functionality is similar to the classic approaches. Pipeline is fundamentally different, because it provides a new engine controlling flows independently from particular nodes and workspaces. So it provides a higher job description level, which was not available in Jenkins before.

Below you can find an example of Pipeline scripts, which runs tests on FPGA board. The id of this board comes from build parameters (fpgaId). In this script we also presume that all nodes have pre-installed tools (Xilinx ISE in this case).

// Run on node having my_fpga label

node("linux && ml509") {

git url:"https://github.com/oleg-nenashev/pipeline_hw_samples"

sh "make all"

}But such scenario could be also implemented in a Free-style project. What would we get from Pipeline plugin?

Getting added-value from Pipeline as code

Pipeline provides much added-value features for hardware-based tests. I would like to highlight the following advantages:

-

Robustness against restarts of Jenkins controller.

-

Robustness against network disconnects.

sh()steps are based on the Durable Task plugin, so Jenkins can safely continue the execution flow once the node reconnects to the controller. -

It’s possible to run tasks on multiple nodes without creating complex flows based on job triggers and copy artifact steps, etc. It can be achieved via combination of

parallel()andnode()steps. -

Ability to store the shared logic in standalone Pipeline libraries

-

etc.

First two advantages allow to improve the robustness of Jenkins nodes against infrastructure failures. It is critical for long-running tests on hardware.

Last two advantages address the flexibility of Pipeline flows. There are also plugins for freestyle projects, but they are not flexible enough.

Utilizing Pipeline features

The sample Pipeline script above is very simple. We would like to get some added value from Jenkins.

General improvements

Let’s enhance the script by using several features being provided by pipeline in order to get visualization of stages, report publishing and build notifications.

We also want to minimize the time being spent on the node with the attached FPGA board. So we will split the bitfile generation and further runs to two different nodes in this case: a general purpose linux node, and the node with the hardware attached.

You can find the resulting Pipeline script below:

// Synthesize on any node

def imageId=""

node("linux") {

stage "Prepare environment"

git url:"https://github.com/oleg-nenashev/pipeline_hw_samples"

// Construct the bitfile image ID from commit ID

sh 'git rev-parse HEAD > GIT_COMMIT'

imageId= "myprj-${fpgaId}-" + readFile('GIT_COMMIT').take(6)

stage "Synthesize project"

sh "make FPGA_TYPE=$fpgaId synthesize_for_fpga"

/* We archive the bitfile before running the test, so it won't be lost it if something happens with the FPGA run stage. */

archive "target/image_${fpgaId}.bit"

stash includes: "target/image_${fpgaId}.bit", name: 'bitfile'

}

/* Run on a node with 'my_fpga' label.

In this example it means that the Jenkins node contains the attacked FPGA of such type.*/

node ("linux && $fpgaId") {

stage "Blast bitfile"

git url:"https://github.com/oleg-nenashev/pipeline_hw_samples"

def artifact='target/image_'+fpgaId+'.bit'

echo "Using ${artifact}"

unstash 'bitfile'

sh "make FPGA_TYPE=$fpgaId impact"

/* We run automatic tests.

Then we report test results from the generated JUnit report. */

stage "Auto Tests"

sh "make FPGA_TYPE=$fpgaId tests"

sh "perl scripts/convertToJunit.pl --from=target/test-results/* --to=target/report_${fpgaId}.xml --classPrefix=\"myprj-${fpgaId}.\""

junit "target/report_${fpgaId}.xml"

stage "Finalization"

sh "make FPGA_TYPE=$fpgaId flush_fpga"

hipchatSend("${imageId} testing has been completed")

}As you may see, the pipeline script mostly consists of various calls of command-line tools via the sh() command.

All EDA tools provide great CLIs, so we do not need special plugins in order to invoke common operations from Jenkins.

| Makefile above is a sample stuff for demo purposes. It implements a set of unrelated routines merged into a single file without dependency declarations. Never write such makefiles. |

It is possible to continue expanding the pipeline in such way. Pipeline Examples contain examples for common cases: build parallelization, code sharing between pipelines, error handling, etc.

Lessons learned

During last 2 years I’ve been using Pipeline for Hardware test automation several times. The first attempts were not very successful, but the ecosystem has been evolving rapidly. I feel Pipeline has become a really powerful tool, but there are several missing features. I would like to mention the following ones:

-

Shared resource management across different pipelines.

-

Runs of a single Pipeline job can be synchronized using the

concurrencyparameter of thestage()step -

It can be done by the incoming Pipeline integration in the Lockable Resources plugin (JENKINS-30269).

-

Another case is integration with Throttle Concurrent Builds plugin, which is an effective engine for limiting the license utilization in automation infrastructures (JENKINS-31801).

-

-

Better support of CLI tools.

-

EDA tools frequently need a complex environment, which should be deployed on nodes somehow.

-

Integration with Custom Tools Plugin seems to be the best option, especially in the case of multiple tool versions (JENKINS-30680).

-

-

Pipeline package manager (JENKINS-34186)

-

Since there is almost no plugins for EDA tools in Jenkins, developers need to implement similar tasks at multiple jobs.

-

A common approach is to keep the shared "functions" in libraries.

-

Pipeline Global Library and Pipeline Remote Loader can be used, but they do not provide features like dependency management.

-

-

Pipeline debugger (JENKINS-34185)

-

Hardware test runs are very slow, so it is difficult to troubleshoot and fix issues in the Pipeline code if you have to run every build from scratch.

-

There are several features in Pipeline, which simplify the development, but we still need an IDE-alike implementation for complex scripts.

-

Conclusions

Jenkins is a powerful automation framework, which can be used in many areas. Even though Jenkins has no dedicated plugins for test runs on hardware, it provides many general-purpose "building blocks", which allow implementing almost any flow. That’s why Jenkins is so popular in the hardware and embedded areas.

Pipeline as code can greatly simplify the implementation of complex flows in Jenkins. It continues to evolve and extend support of use-cases. if you’re developing embedded projects, consider Pipeline as a durable, extensible and versatile means of implementing your automation.

What’s next?

Jenkins automation server dominates in the HW/Embedded area, but unfortunately there is not so much experience sharing for these use-cases. So Jenkins community encourages everybody to share the experience in this area by writing docs and articles for Jenkins website and other resources.

This is just a a first blog post on this topic. I am planning to provide more examples of Pipeline usage for Embedded and Hardware tests in the future posts. The next post will be about concurrency and shared resource management in Pipelines.

I am also going to talk about running tests on hardware at the upcoming Automotive event in Stuttgart on April 26th. This event is being held by CloudBees, but there will be several talks addressing Jenkins open-source as well.

If you want to share your experience about Jenkins usage in Hardware/Embedded areas, consider submitting a talk for the Jenkins World conference or join/organize a Jenkins Area Meetup in your city. There is also a Jenkins Online Meetup.